According to new analysis from Google Threat Intelligence Group (GTIG), attackers are building LLMs straight into their tools. AI is no longer a side helper. It sits inside the attack itself.

GTIG mapped several examples. For instance, PROMPTFLUX uses a Gemini-powered module that repeatedly asks the model to rewrite its own code so it looks different each time it runs. In addition, PROMPTSTEAL uses a Qwen model hosted on Hugging Face to generate fresh Windows commands, gather system details, and ship everything out. Finally, QUIETVAULT uses prompts on the infected machine to hunt for tokens and other secrets, while FRUITSHELL carries hard-coded prompts meant to sidestep AI-driven defenses.

Google also reports that attackers regularly try to “sweet talk” guardrails. They pretend to be students working on cybersecurity labs or researchers studying malware behavior. With enough tries, they find phrasing the model accepts.

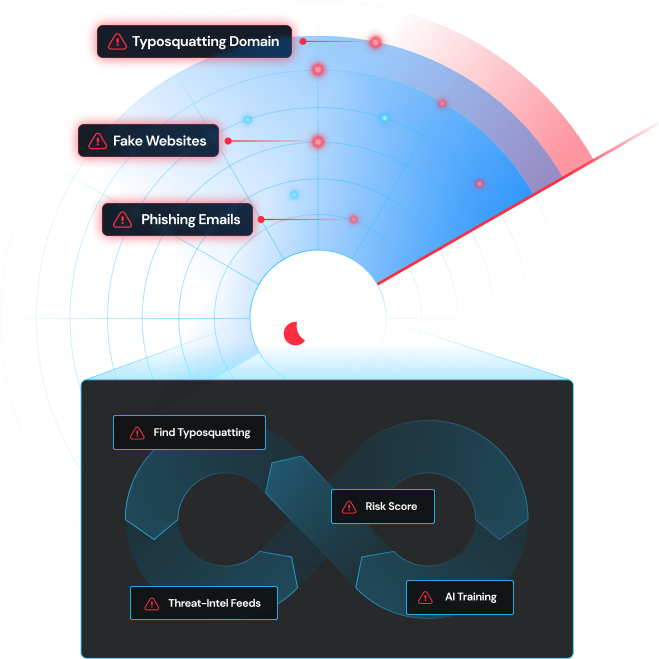

All of this feeds a growing underground market where criminals share AI-flavored phishing kits, stealth tools, and automation shortcuts. With these tools, even inexperienced actors can produce emails that sound legitimate, spin up polished spoofed domains, and launch malware that adapts on the fly. Unsurprisingly, this creates the perfect setup for brand impersonation.

How to keep your brand safe:

- Watch for unexpected model traffic

  If machines that should never talk to LLM APIs suddenly do, investigate.

- Rely on behavioral detection

  Shape-shifting code will not match signatures. Look for unusual process activity or scripting behavior, such as scripts that keep rewriting themselves.

- Train teams for AI-polished phishing

  These messages are cleaner. Shorter. More believable. Make sure employees know that perfect grammar is not a sign of safety.

- Add AI-generated lures to red team drills

  Test how your staff reacts to spoofed domains and emails crafted with an LLM’s help.

- Expand domain and brand monitoring

  Watch for near-match domains, homograph tricks, and sudden spikes in suspicious registrations.
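The tip about watching for unexpected model traffic can be sketched against DNS logs. What follows is a minimal Python sketch, not a product feature: it assumes a hypothetical resolver log already reduced to (host, query) pairs, and an illustrative, non-exhaustive set of LLM API domains (the Gemini, Hugging Face Inference, and OpenAI endpoints). The machine names are made up.

```python
from dataclasses import dataclass

# Illustrative, non-exhaustive set of hosted LLM API endpoints (assumption;
# extend it with whatever providers matter in your environment).
LLM_API_DOMAINS = {
    "generativelanguage.googleapis.com",  # Gemini API
    "api-inference.huggingface.co",       # Hugging Face Inference API
    "api.openai.com",                     # OpenAI API
}

@dataclass(frozen=True)
class DnsEvent:
    """One DNS lookup from a hypothetical resolver log."""
    host: str   # internal machine that made the lookup
    query: str  # domain name it asked for

def is_llm_domain(query: str) -> bool:
    """True if the query is a listed LLM API domain or a subdomain of one."""
    name = query.lower().rstrip(".")
    return any(name == d or name.endswith("." + d) for d in LLM_API_DOMAINS)

def flag_unexpected_llm_traffic(events, allowed_hosts):
    """Return (host, domain) pairs for LLM API lookups from machines outside the allowlist."""
    return [
        (ev.host, ev.query.lower().rstrip("."))
        for ev in events
        if is_llm_domain(ev.query) and ev.host not in allowed_hosts
    ]

events = [
    DnsEvent("dev-laptop-07", "api.openai.com"),
    DnsEvent("payroll-srv-01", "generativelanguage.googleapis.com."),
    DnsEvent("payroll-srv-01", "example.com"),
]
print(flag_unexpected_llm_traffic(events, allowed_hosts={"dev-laptop-07"}))
# [('payroll-srv-01', 'generativelanguage.googleapis.com')]
```

Checking for an exact match or a dot-prefixed suffix counts real subdomains of a listed endpoint while avoiding false hits on names that merely end in the same characters. DNS is only one vantage point; traffic that goes through a proxy or DNS-over-HTTPS would need the same check on proxy or firewall logs.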
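The behavioral-detection tip is easier to see with a toy example. Code that rewrites itself on every run defeats hash signatures because each variant hashes differently, but the churn itself is a behavior you can count. This Python sketch assumes a hypothetical feed of file snapshots (host, path, contents) from periodic integrity scans; the paths, host name, and the `min_variants` threshold are illustrative, and real endpoint tooling would track far richer signals such as process lineage.

```python
import hashlib
from collections import defaultdict

def sha256_hex(data: bytes) -> str:
    """Content hash, as a signature-based scanner might compute it."""
    return hashlib.sha256(data).hexdigest()

def flag_self_rewriting_files(snapshots, min_variants=3):
    """Flag (host, path) pairs whose content has taken at least
    `min_variants` distinct forms across the observed snapshots.

    `snapshots` is an iterable of (host, path, content_bytes) tuples
    collected over time (hypothetical input format for this sketch).
    """
    variants = defaultdict(set)
    for host, path, content in snapshots:
        variants[(host, path)].add(sha256_hex(content))
    return sorted(key for key, hashes in variants.items() if len(hashes) >= min_variants)

# Example with hypothetical paths: one script keeps changing, one stays stable.
snapshots = [
    ("ws-12", "C:/Users/Public/update.vbs", b"variant 1"),
    ("ws-12", "C:/Users/Public/update.vbs", b"variant 2"),
    ("ws-12", "C:/Users/Public/update.vbs", b"variant 3"),
    ("ws-12", "C:/Tools/backup.ps1", b"stable"),
    ("ws-12", "C:/Tools/backup.ps1", b"stable"),
]

# A blocklist holding only the first variant's hash misses the later rewrites...
known_bad = {sha256_hex(b"variant 1")}
missed = [p for _, p, c in snapshots if p.endswith(".vbs") and sha256_hex(c) not in known_bad]
print(len(missed))  # 2

# ...while the churn check flags the file regardless of its current hash.
print(flag_self_rewriting_files(snapshots))  # [('ws-12', 'C:/Users/Public/update.vbs')]
```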
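For the domain-monitoring tip, a rough near-match and homograph check fits in a few dozen lines. This sketch decodes punycode labels with Python's built-in `idna` codec (which implements the older IDNA 2003 rules), maps a handful of lookalike characters to the letters they imitate, and then measures edit distance to the brand name. The brand `examplebank`, the confusables table, and the distance threshold of 1 are all assumptions for illustration; the table is far smaller than the Unicode confusables data a real system would use.

```python
# Deliberately tiny lookalike map (assumption); production monitoring would use
# the full Unicode confusables data from UTS #39.
CONFUSABLES = {
    "\u0430": "a",  # Cyrillic small a
    "\u0435": "e",  # Cyrillic small ie
    "\u043e": "o",  # Cyrillic small o
    "\u0440": "p",  # Cyrillic small er
    "\u0441": "c",  # Cyrillic small es
    "0": "o",
    "1": "l",
}

def to_unicode(domain: str) -> str:
    """Decode punycode (xn--) labels so homographs can be compared as text."""
    try:
        return domain.encode("ascii").decode("idna")
    except UnicodeError:
        return domain  # already Unicode, or not valid IDNA: compare as-is

def skeleton(label: str) -> str:
    """Map lookalike characters to the ASCII letter they imitate."""
    return "".join(CONFUSABLES.get(ch, ch) for ch in label)

def levenshtein(a: str, b: str) -> int:
    """Classic edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]

def is_suspicious(domain: str, brand: str, owned: set, max_distance: int = 1) -> bool:
    """Flag a domain whose first label is a homograph or near match of `brand`."""
    name = to_unicode(domain).lower().rstrip(".")
    if name in owned:
        return False
    label = name.split(".")[0]
    return levenshtein(skeleton(label), brand) <= max_distance

OWNED = {"examplebank.com"}
candidates = [
    "examplebank.com",                                     # the real site
    "examp1ebank.com",                                     # digit 1 posing as l
    "exampebank.net",                                      # one letter dropped
    "ex\u0430mplebank.com".encode("idna").decode("ascii"),  # Cyrillic a, punycode form
    "weatherapp.com",                                      # unrelated
]
print([is_suspicious(d, "examplebank", OWNED) for d in candidates])
# [False, True, True, True, False]
```

Normalizing lookalikes first means a homograph costs nothing in the distance budget, so a domain that combines a Cyrillic letter with a dropped character still lands within the threshold.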